Lab note #050 Prior art of a React-like runtime

I fell short this week in that I didn't do what I said I was going to do. Instead, of writing code, I went down the incremental rabbit hole. It was innocuous at first, but ended up being a full deep dive, mostly into the work of Steven Wittens (aka that site with that header), along with some others. I have a suspicion this is an affliction, in which I fear I'm missing something others have already discovered to help avoid deadends. But I'm reminded that most valuable knowledge is tacit, and can only be gained through action.

So was the juice worth the squeeze? I've read Wittens with interest before, but his writing style is heavily reference laden. Without the appropriate background knowledge, it's easy to miss what he's saying. This time around, I'm better informed about how React is implemented, and I employed GPT to help expand references, and ask questions about what he might mean. I think that's made some things much clearer, and it's given me some new perspectives and ideas too.

Since this isn't really a suvey post and more a log of my own discoveries, I won't do an exhaustive list of what was clarified. Otherwise, I'll be tempted to explain everything from the start. But I'll list out a couple a-ha moments for me.

- Cache invalidation is hard, because the cache is usually the least knowledgable part of the system. It tries to stay agnostic to what's being cached, but as a result, it has no idea when something should be invalidated. If you can track context and dependencies perfectly, cache invalidation is trivial.

- A dirty bit is really a poor man's continuation. At the time a dirty bit is flagged, we simply don't know enough to redo the computation. We may need to batch things up, or take other things into account. So we set the dirty bit for a second pass when we do know enough.

- While JSX is an tree arrangement of the components in your view, it's actually an effects system that describes the rest of the computation that needs to be done. It's a way of communicating with the underlying runtime, what's next. It's like a continutation.

He also added some concepts and features to React that I don't think he explained pretty well. But once I triangulated what he was saying, two really stood out to me:

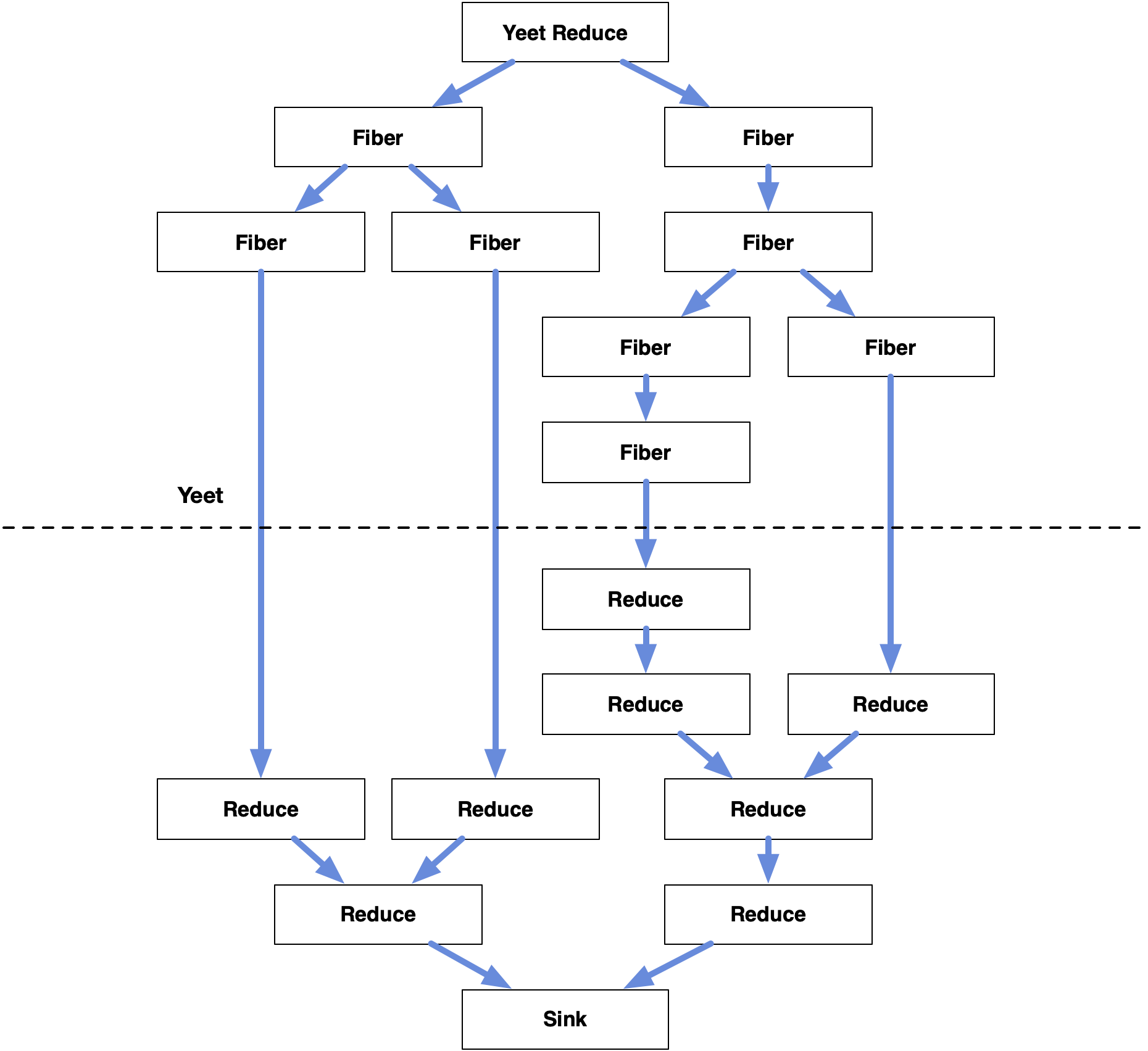

Yeet-reduce: How do you communicate from children back to a parent without breaking one-way data flow? Construct a tree reduction nodes that mirrors your component tree, starting from the leaves. Join the leaf nodes together into reduction nodes to get the first layer. Repeatly join reduction nodes in successive layers until you're at a single reduction layer. This last reduction node is associated with some ancestor component node that can then take this result and trigger another render pass (among other things).

Quote-unquote: Inspired by Lisp quotes and unquoting, this is like being able to take the literal description of a component tree (quote) and grafting it onto another component tree (unquote). The other component tree can be the component tree in the next render pass, or it can be on another machine. And you can describe this as a single component tree. What this allows is for a server to communicate component tree changes to a server, so a split between backend and frontend could be eliminated. In other words, a single code base whose code can straddle both the frontend and backend.

The part I struggled with was how to reconcile the React view of reactive systems with the ones described by Jane Street in Seven Implementations of Incremental, the parametrics laid out in Building a la carte, or even with algebraic effects from two weeks ago. After going back and forth with Claude and GPT, I think my current view is as follows:

React-like systems model computation as a hierarchy of effects. The effects generate a tree of units of work (that React calls fibers) that maintain their own state. This state can be used to generate other units of work dynamically.

If the call stack were persistent data structures, the stack would be a computational tree. Each path down a tree is a trace of the stack at any one point in time. And if each node could hold its own state, we could view a node as the local variables in a stack frame. Hence, continuations would then be the ability to go more than one level up to chose a different path down this computational tree.

It seems hooks and components could be implemented as algebraic effects. It allows us to call a function before all the data needed to compute it would be ready. We can ask for data inside of the function, and once we get the results, we resume computation from that point.

All effects would be funneled into a queue by which a scheduler chooses the order by which we work on something. For now, it'll be like other reactive systems–the order by which you execute is largely by the way the effects are hooked up in the computational graph.

The rebuilder chooses whether to execute or not. I think immutability is the key to making this easy, though immutability brings other problems in to the mix. This is unclear to me at the moment.

How to do continuations is also unclear to me at the moment. Algebraic effects rely on a specific kind of continuation, but with generators, you only get one-shot continuations. It's doesn't allow us to resume at the same point multiple times. This isn't the greatest, especially if I want to handle streaming data. React-like systems typically just re-render from the root of the component tree, and work their way down to the resumption point, counting on the incrementalism not to do more work is necessary. This also doesn't seem great, but it might be fine to emulate delimited continuations. Anyway, I think this will become clearer as I write more code.

Reader mail

Kartik says:

As someone who doesn't live and breathe this, I wish there were links on each row of the first table. Like, which one is Conal Elliott's version? What are examples of the others? Which one is React?

https://mastodon.social/@akkartik@merveilles.town/113346360799657352

None of them! Conal Elliott's original formulation of Functional Relational Programming (FRP) is that it has two main ideas:

- A piece of software's specs should be defined with denotational semantics (the mathematical sense of meaning) rather than operational semantics (the procedural sense of meaning). Best I can describe it is a mathematically declarative approach to design and architecture of software. This is what he means by functional.

- Model incoming data as a continuous value–a signal, so that when we want to merge values, it's trivial to do so. We don't want to worry about different sampling rates due to discretization. [1] If our programs can handle continuous incoming signals, then it's easy to build systems that change its output as soon as inputs change. This is what he means by reactive.

FRP confusion stems from the name. Elliott probably should have called it Denotationally Designed, Continuous-signal-based Programming. Nowadays we mean functional in the sense that the output to a computation should only depend on its input. And we take reactive to mean the same thing Elliot did–that the system output changes immediately to input changes. However, most formulations of FRP nowadays don't include continuous signals at all. Hence, his lamentation and dismay.

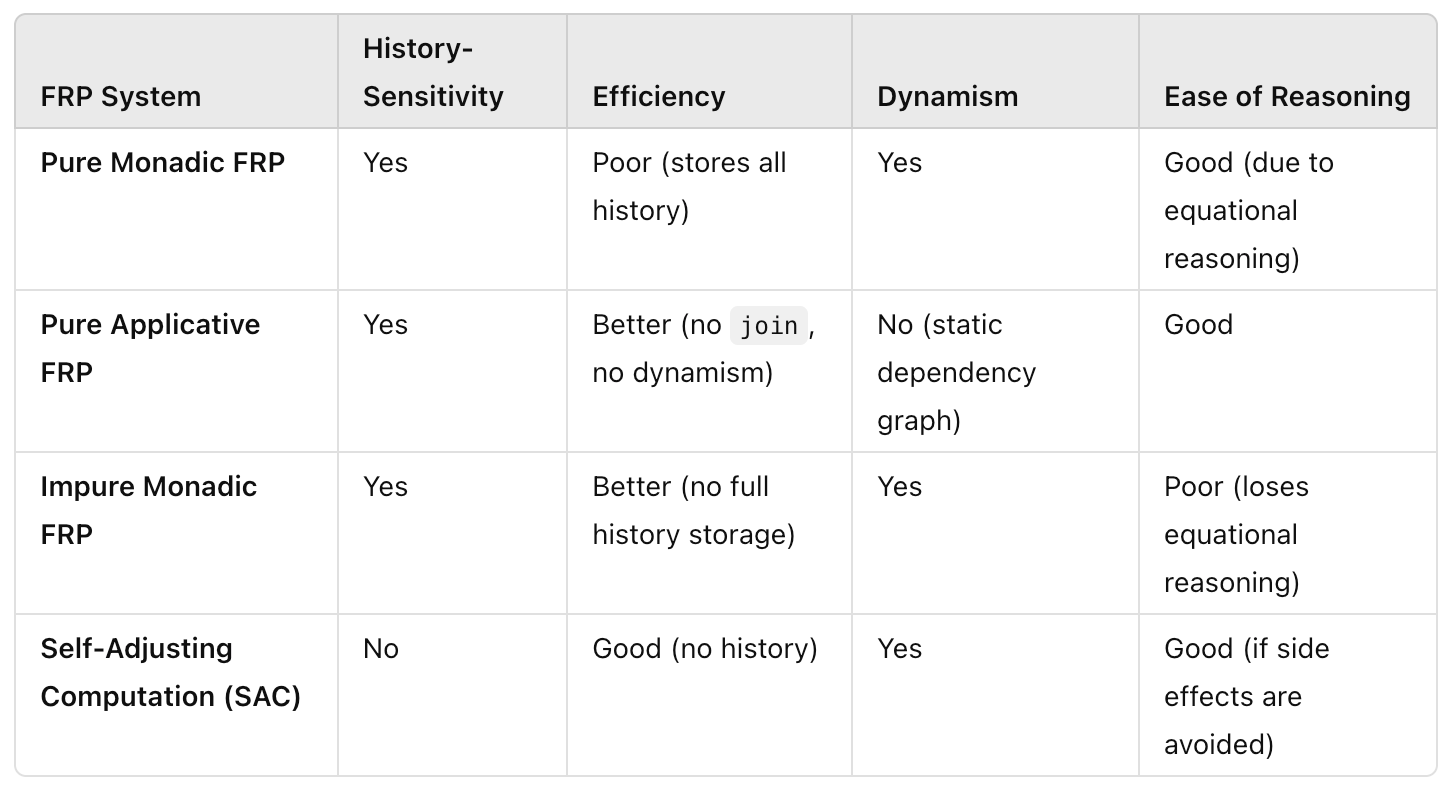

The chart from the first table in last week's lab note comes from Jane Street's breakdown and categorization of FRP, which stems from a talk by Evan Czaplicki at Jane Street. who wrote and designed the Elm programming language. Elm was initially based on FRP, but had abandoned signals from FRP as too hard for people to understand.

None of these are formulations of Elliot's version of FRP as he defined it. Instead, it's kind of a table of design choices you can make in your FRP system. Ideally, you'd be able to have all four properties, but some of them are in conflict with each other, so each row will have a single weakness in its design.

I don't put as much effort into connecting all the dots in the weekly lab notes, so if any of you have questions, I'd be happy to answer it. Or if a couple people say they want some idea in a lab note to be expanded into a blog post I can do that too. Just hit me up.

List of Resources this week:

- Model-View-Catharsis — Acko.net

- The Incremental Machine — Acko.net

- Climbing Mount Effect — Acko.net

- Reconcile All The Things — Acko.net

- Headless React — Acko.net

- On Variance and Extensibility — Acko.net

- React - The Missing Parts — Acko.net

- Get in Zoomer, We're Saving React — Acko.net

- Fuck It, We'll Do It Live — Acko.net

- KEYNOTE: Use.GPU - Declarative/Reactive 3D Graphics by Steven Wittens - YouTube

- 2016 Minsky Jane Street. Seven Implementations of Incremental

- William Byrd on "The Most Beautiful Program Ever Written"

[1] If you've ever had to use reduction or aggregating nodes or functions in streaming systems, you'll have run into this problem before.