Lab notes #011 value iteration and the LLM assistance problem

This week was a little different. The implementation work was supposed to be fairly trivial, but in practice, it took a lot of time. The implementation was for an iterator that iterates over all the values in the RRBT in order. In theory, it's pretty trivial. The core concept is just the body of the for loop of the iterative implementation of breadth-first search. There's a tricky bit, where you can't move to the next node until you iterate through all the values of a leaf node, but it's not too hard.

What did end up being quite hard was satisfying the borrow-checker. The children field of every internal node is an Rc<Rrbt<V, B>>. This makes sense, because an Rc<> is effectively immutable, and in a persistent data structure, you have multiple immutable references to the same node.

As a consequence, it seemed to make sense to make the queue inside of the iterator to also contain Rc<Rrbt<V, B>> as well.

pub struct ValueIter<V, const B: u8>

where

V: Clone + Debug,

{

queue: VecDeque<Rc<Rrbt<V, B>>>,

leaf_idx: usize,

}This ended up being problematic because, in the implementation of the `ValueIter`, the item type should be a reference, because we don't want ownership to transfer when we iterate over the RRBT. This also implies that we need a lifetime annotation associated with the Item, otherwise, Rust won't know how long to keep it around.

impl<'a, V, const B: u8> Iterator for ValueIter<V, B>

where

V: Clone + Debug + 'a,

{

type Item = &'a V;

fn next(&mut self) -> Option<Self::Item> {

// find the next value

}

}

The above code doesn't compile because there are no bounds on the lifetime annotation 'a in the impl trait, self type, or predicates.

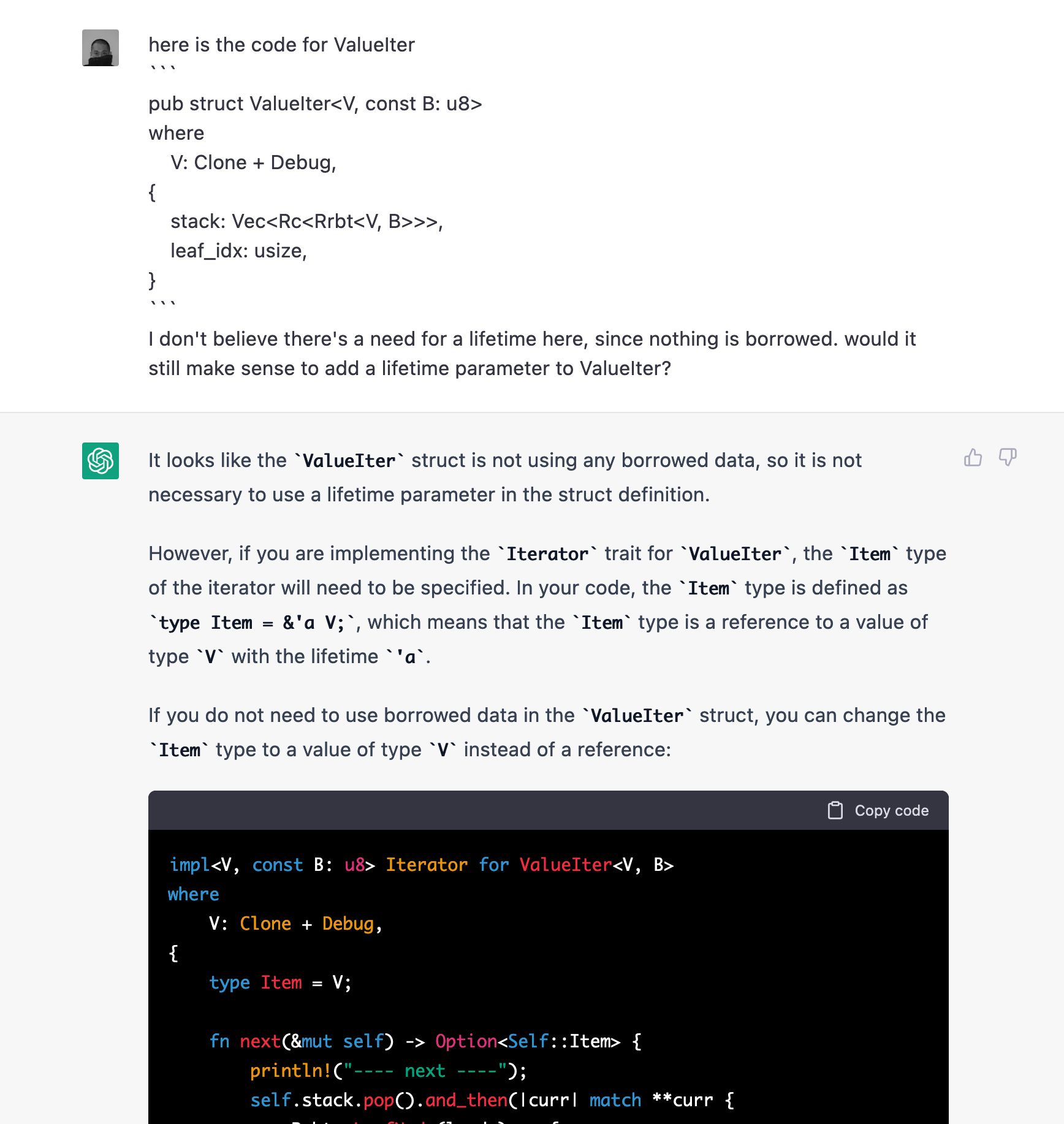

This is where I turned to ChatGPT to try to get some assistance. I posted the code that I had, as well as the error, to see what it could tell me.

The first go around, it simply suggested that I add lifetime annotation to the ValueIter struct. But the struct doesn't actually need lifetime annotation. We'd get errors about how it's being unused. So then I asked it about that.

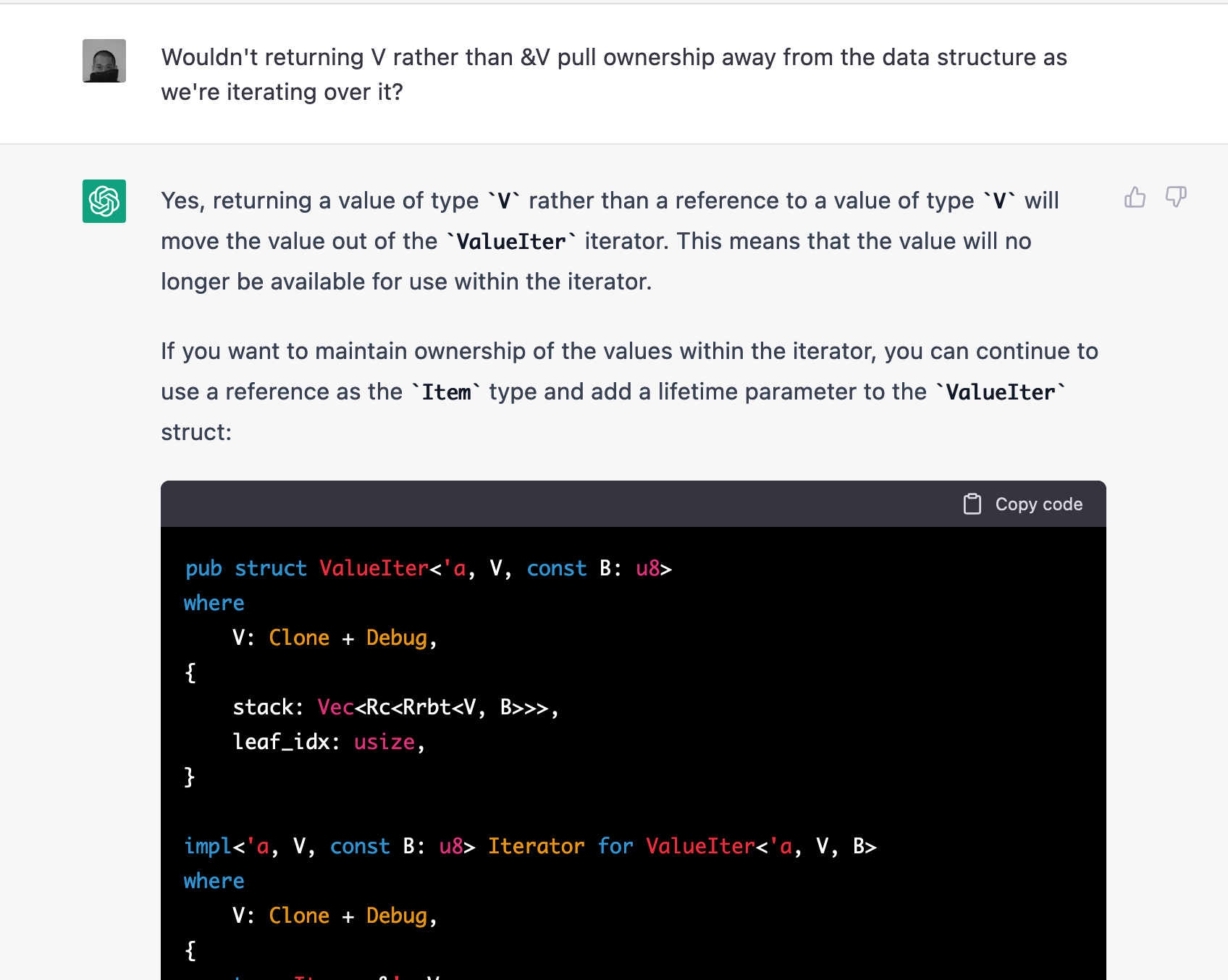

Then it was able to suggest that if that was the case, then Item shouldn't be referenced. Which puts us back at square one. And when I pointed that out, it just went back to the previous suggestion of adding lifetimes.

In addition, if we kept the queue (mistakenly named stack in the screenshots) an Rc<> without a lifetime annotated reference, once we dequeued a node, we couldn't actually return anything from the node. That's because the dequeued node is owned by the function, and returning anything from it would not survive the end of the function scope.

This is where I tried a variety of things, but after thinking about it carefully, the issue is that both the queue, "own" the node. What if, instead the stack holds references instead of Rc<>? It turns out that was the solution.

pub struct ValueIter<'a, V, const B: u8>

where

V: Clone + Debug,

{

queue: VecDeque<&'a Rrbt<V, B>>,

curr: Option<&'a Rrbt<V, B>>,

leaf_idx: usize,

}

This avoids the problem by giving a lifetime to ValueIter and allows for the return of the value contained inside of the node dequeued from the queue.

This is where I can't say that ChatGPT is particularly insightful in its advice. Like Rust errors, it doesn't seem to be able to do a "look-ahead" to see if the solution it offers will be at a dead end. That's not to say that future LLMs aren't going to be more powerful, but at the moment, they're pretty good at giving basic summary definitions, at least in my case, it's not been able to generate useful code for me.

Despite the negative result here, I'm bullish on the impact LLMs are going to have on programming. The impact is big enough to question the direction of my work here. Does it still make sense to keep going? What does an LLM-assisted programming language or environment look like?

Off the top of my head, this can go a couple of different ways, none of which are mutually exclusive:

- We use text descriptions to generate code templates that we then modify in our own code base. When LLMs get good enough, we could potentially just use LLMs to deal with complexity for us, and we only step in to tweak or review the code.

- We mostly use LLMs for architectural design work and rubber ducking. It helps generate ideas and suggestions instead of actually writing code.

- We make a new programming language or environment that not only has affordances for humans to understand but also affordances for LLMs to write.

It's the last one that seems the most interesting, but also with the most risk of failure. The momentum of existing platforms and technologies is heavy, as we've not been able to rid ourselves of the QWERTY keyboard and IPv4, it's unlikely that we'll rid ourselves of the complexity of our infrastructure and programming languages any time soon.

But this is something that I'll think more about it in the coming weeks, and how that influences the direction of my work.