Lab note #048 Basic Effects Done, Reactivity Next

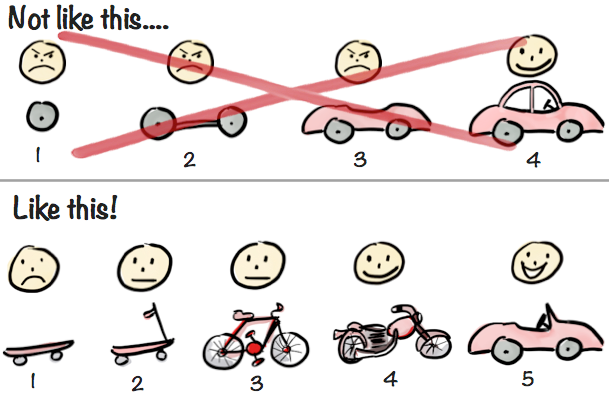

I spent most of the week getting a basic algebraic effects runtime working. The initial version is single-threaded, synchronous, no typing, with a single yield from handlers. For now, it's good enough for building the proverbial skateboard.

Only during implementation did I run into design questions I wouldn't have had otherwise. Just to cover two:

- How do handler lookups work?

- How do effects compose? Is it the handlers that compose? Or the effect descriptions?

How do lookups work and effects compose?

In most introductions to algebraic effects, you'll see them explained as resumable exception handling. It's because this is a familiar control flow construct for most programmers. In language implementations of algebraic effects like Koka, Eff, and Unison, this is how it works. You wrap effectful computations–code that can raise side-effects–in handlers of effects (effect handlers) like you do with try and catch blocks. When an effect is raised, the runtime looks up the nearest handler for that effect in the stack. But it seems like this doesn't have to be the case. You could have a flat key/value store of handlers, where the keys are the effect type. The advantage of handlers on the stack is that you can switch out different handlers for different situations, as you work through the computation.

The other question I had was how do effect compose? In fact, what is algebraic about algebraic effects? There is a paper and a talk (part 1 and part 2) that answers this question. I watched it a while back, but honestly, most of it didn't sink in. I tried again recently with the help of ChatGPT. [1] Here's the summary of what I got out of it.

Just as you have addition and multiplication on numbers, you can define a set of operations on some set of data. And just as there are commutativity and associativity laws on numbers, you can have equational laws on your own set of operations. It's this combination of operations and equational laws that determines how your effects compose. In Unison, they call this combination an Ability. Here's a concrete example with a state ability. Think of this as a formalization of useState from React.

Consider the effect of mutable state. To represent this, you need two main operations:

get: retrieves the current state.put: updates the state.

In a traditional imperative language, these would be low-level operations that interact directly with memory. But algebraically, you model them with equational laws like:

- get ∘ get=λs.(s,s) Two consecutive

getoperations should return the same state. - put(s) ∘ get=λt.(s,s) A

putfollowed by agetshould return the state that was just set. - put(s) ∘ put(t)=put(t)

putoperations should overwrite the state (i.e.,put(s)followed byput(t)is the same as justput(t)).

This formalization allows you to reason algebraically about state without concerning yourself with the lower-level implementation details. In Koka, Eff, and Unison, you never state these equational laws explicitly. The handlers imply the equational laws are held with their implementation.

The other way to compose effects is at the moment you're raising the effect. This is what Effect.ts does. At the time when an effect is raised, you can compose them with other effects, using pre-written effect handlers. [2]

import { pipe } from "effect"

// Define simple arithmetic operations

const increment = (x: number) => x + 1

const double = (x: number) => x * 2

const subtractTen = (x: number) => x - 10

// Sequentially apply these operations using `pipe`

const result = pipe(5, increment, double, subtractTen)

console.log(result) // Output: 2This sample from their docs is just to show the flavor of composition: effect descriptions are composed when they're being raised. So as far as I understand it, this design choice would change the effects runtime. Instead of just looking at a sequence of effects descriptions, and looking for their corresponding handler to execute, you can have a sequence of composite effects descriptions, which you'd need to traverse–like an abstract syntax tree. When you traverse leaf effect descriptions, you'd execute them, and when you traverse composition effect descriptions, you'd compose the results of the child effects.

The difference between the two approaches is basically the difference between driving stick and driving automatic. In Koka/Eff/Unison, you have control over how the effect inside an ability compose, and you have to decide how they compose, if at all with all the other abilities. In Effect.ts, the design of the effects and the composibility are written for you. All you have to decide is how to put them together.

I haven't decided what I'm going to do on this front, but will put a pin in it. There were other design concerns, such as how to make the runtime run concurrent effects? Turns out you need first-class continuations for that, and one-shot continuations provided by generators aren't enough for it. Once again, something I'll look into later.

Effects for Realtalk

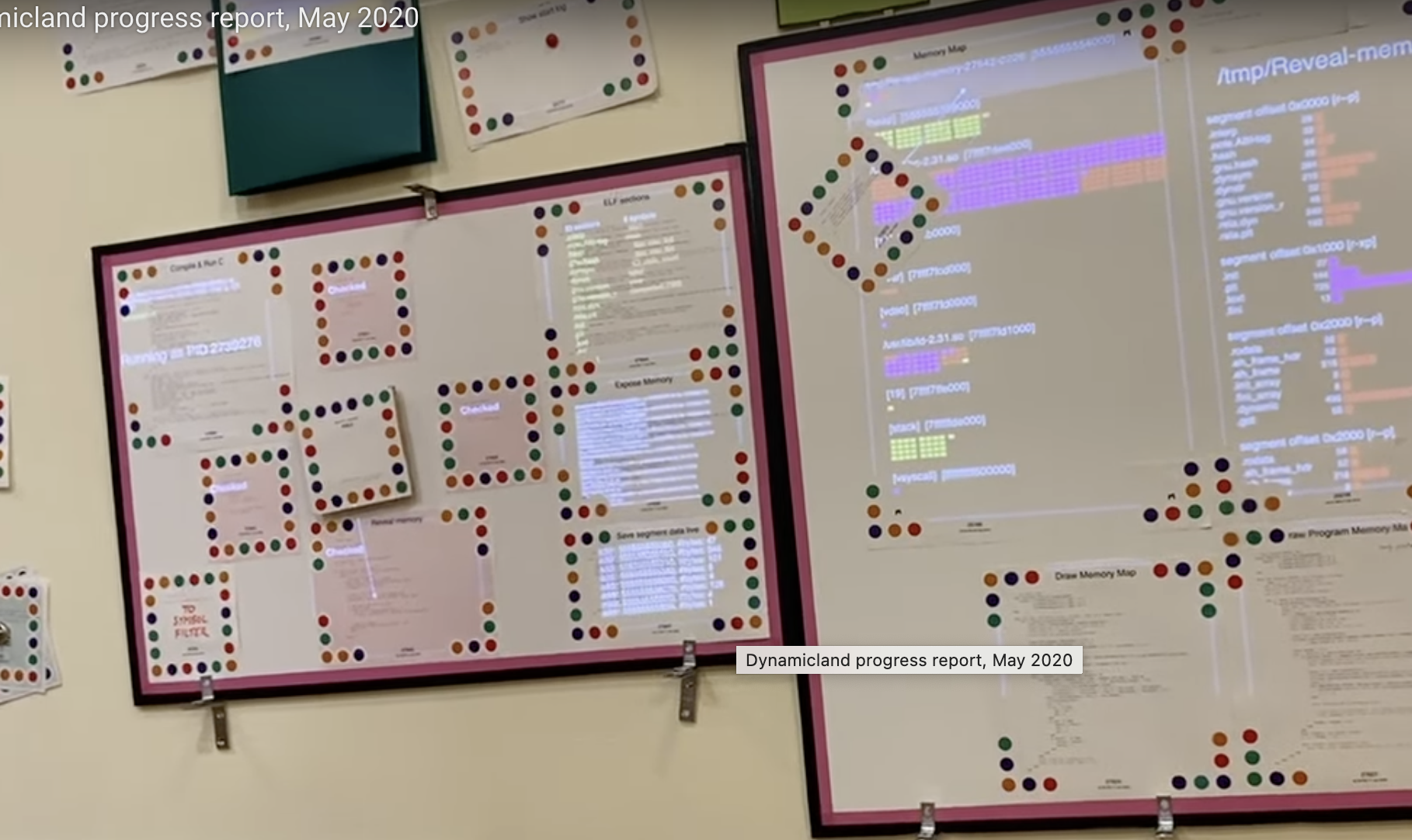

In the course, of musing about effects, I thought that notebook cells were a little like Dynamicland's colored dot papers (snippets?). But only superficially. They were only similar in that they were snippets of code that lived in some other environment.

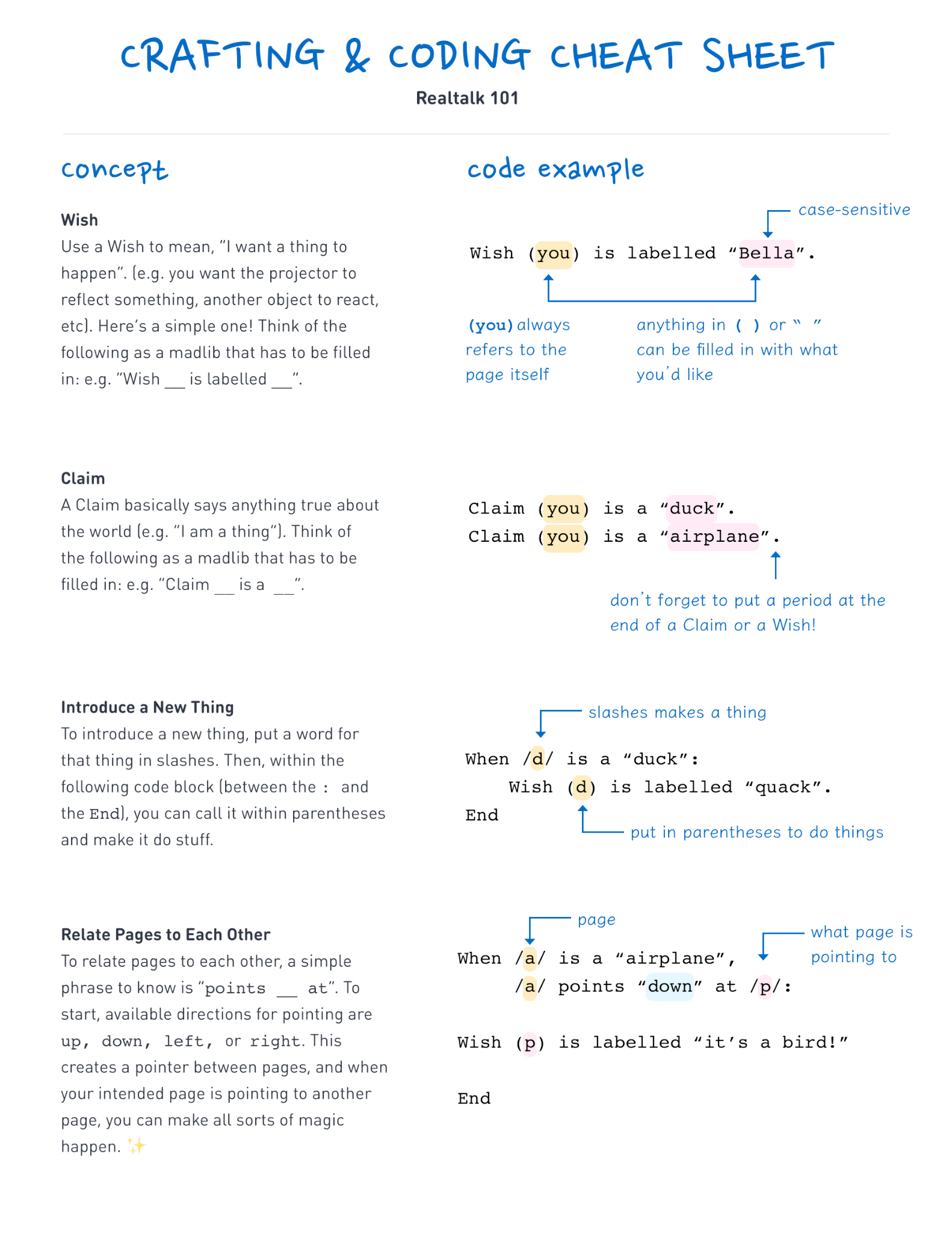

What would it look like if notebook cells could also act like these Dynamicland snippets? Could notebook cells also talk in Realtalk? Realtalk is a declarative language, and if you squint a little, it's like a prolog/datalog variant. Check out the cheatsheet:

How does this map to an effects system?

- "Wish" Statements: These can be thought of as requests for some change or action to happen. A "Wish" would correspond to triggering an effect in your system.

- "Claim" Statements: These are assertions or state declarations, which could be facts that get stored in the global state. Handling a "Claim" would involve modifying state or adding an entry in a data store.

- Events and Rules: Statements like "When /a/ is a 'duck', Wish (a) is labelled 'quack'." would be handled by capturing triggers that evaluate conditions and, when true, generate new effects or updates.

I haven't really done too much work in this area, but it seemed important. A glimpse of something I can't quite put my finger on yet.

Next steps

In the interest of moving quickly and making progress towards something I can use, I'm debating two paths. Reactivity or deployment first?

Reactivity is helpful for keeping the notebook in a working state for the user. But deployment lets me put the notebook to work.

I ended up picking reactivity, even though I had planned on doing deployment first. I'm a bit wary of this next part, because it's full of rabbit holes. Reactivity has a bunch of literature in Functional Reactive Programming (FRP), related to Incremental Computation (like Differential Dataflow).

I've started reviewing my notes on reactivity from the last couple of years. And a rabbit-hole question had already surfaced in my head: Is Functional Reactive Programming isomorphic to Logic Programming?

[1] For fun, I also plugged AE into NotebookLM for a podcast.

[2] If you've used Elm Cmds before, it feels like that.