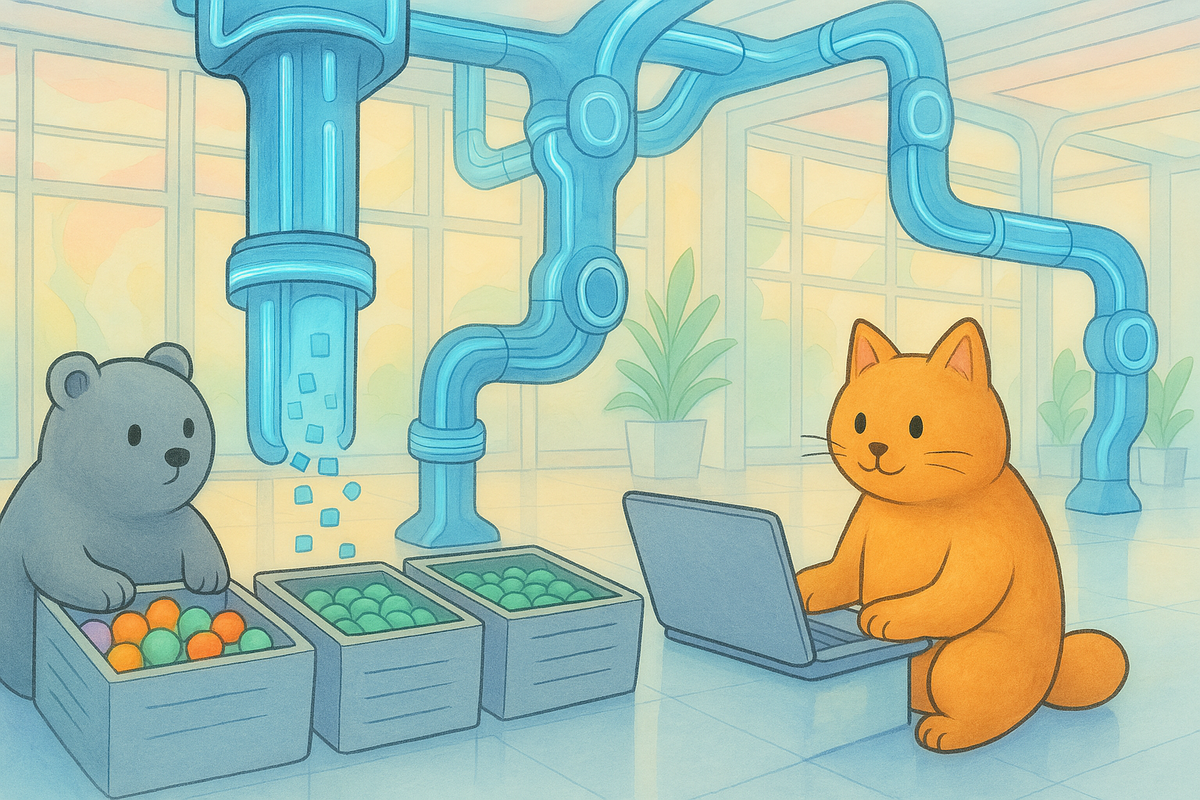

Lab note #067 Pipelines operate on collections of data

Wrapped type computing

Despite resisting the pull toward writing an interpreter/compiler, I thought about it some more, and I find it almost inevitable given the properties and constraints that I have.

I went back to the three things I want the notebook to do: reactivity, collaboration, and observability. Under the banner of observability, I needed each run to be reproducible, and that can best be supported if the computation can be reified as a declarative data structure first. This separates declaring the computation from executing the computation, which means I'd have more control over the execution.
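To make the declare/execute split concrete, here is a minimal sketch in Python. The names `Const`, `Apply`, and `run` are made up for illustration and are not the notebook's actual design; the point is only that the program is plain data until an executor walks it, so the executor can log, replay, or reschedule however it likes.

```python
from dataclasses import dataclass
from typing import Any, Callable

# Hypothetical node types: a computation is plain data until something runs it.
@dataclass(frozen=True)
class Const:
    value: Any

@dataclass(frozen=True)
class Apply:
    fn: Callable[..., Any]
    args: tuple

def run(node, trace=None):
    """One possible executor: evaluate the description and log every step."""
    if isinstance(node, Const):
        return node.value
    args = [run(arg, trace) for arg in node.args]
    result = node.fn(*args)
    if trace is not None:
        trace.append((node.fn.__name__, args, result))
    return result

def add(a, b):
    return a + b

def double(x):
    return x * 2

# Declaring the computation does no work...
program = Apply(double, (Apply(add, (Const(1), Const(2))),))

# ...executing it is a separate step that the runtime fully controls.
trace = []
result = run(program, trace)
```

Because `program` is just a value, a different `run` could cache sub-results or replay a recorded trace without the declaration changing.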

If that's the case, it would allow multiple resumption, effect type checking, and reproducibility, among other things. It looks more and more inevitable. And as Andrew Blinn points out, this is a typical approach in functional systems: reify the computation as a wrapper, then use syntax sugar to make it palatable.

As a side note, the more I've been diving into this, the more I think JSX was a genius move. It's actually a compositional and declarative description of computation to send to the React runtime, disguised as a declaration of the view. In order to have alternative execution models, you're going to have to either intercept program interpretation while it's executing, or create a description of the program and separate it from its execution. What you gain in execution flexibility you lose in developer affordance. JSX goes the latter route, but sidesteps the affordance problem by framing it as a declaration of the view--which it is--but it doubles as a wrapped type that can change the execution model to a reactive one. I don't think enough people appreciate the sleight of hand here.

So the remaining problem is how to make this a better developer experience. What is the syntax sugar that would work here? The advantage of the direct style everyone is used to in imperative programming is that the semicolon is assumed: when we execute one line, the next line waits until the current one is done. With that assumption, we can focus on the operations. The problem with monadic composition is that everything is wrapped, so the focus is on the composition rather than the operations.

Haskell gets around this with do-notation (implemented in the compiler) and idiom brackets (implemented in userland). Elm embraces the composition, but tries to make it more understandable with |> (the pipe operator) and better names for the function composition (andThen). In my tests, I was using infix operators, as in math. But in the actual sample app, a Zig doc RAG pipeline, I found that I didn't use them at all. Most of what I was doing was function composition through wrapper types.
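A small contrast may help here. The sketch below uses a made-up `Wrapped` type with an `and_then` method (named after Elm's andThen, but not taken from any real library) to show the same two operations written in direct style and in wrapped style.

```python
from dataclasses import dataclass
from typing import Any, Callable

@dataclass(frozen=True)
class Wrapped:
    """Hypothetical wrapper: holds a thunk instead of a value."""
    thunk: Callable[[], Any]

    def and_then(self, fn: Callable[[Any], "Wrapped"]) -> "Wrapped":
        # Sequencing is explicit: run this step, feed its value to the next one.
        return Wrapped(lambda: fn(self.thunk()).thunk())

def pure(value):
    return Wrapped(lambda: value)

def parse(text):
    return pure(text.split(","))

def count(items):
    return pure(len(items))

# Direct style: the "semicolon" sequences the lines for us.
def direct(text):
    items = text.split(",")
    return len(items)

# Wrapped style: the same operations, but the composition is in the foreground.
wrapped = pure("a,b,c").and_then(parse).and_then(count)
```

Nothing in `wrapped` runs until `wrapped.thunk()` is called, which is what buys the alternative execution model; the price is that every step has to be lifted and chained by hand.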

If that's the case, I think I can pull the trick that JSX did, but let the output of a reactive node be a description of the pipeline. This does come full circle. My initial attempt at the notebook six months ago tried to use a description of a pipeline, but I found that pipelines are DAGs, not trees, so a hierarchical description isn't really well-suited. With some hindsight, I think we can break it down into two kinds of branches: joins and splits. We do splits all the time whenever we indent, so I won't discuss them much here. But joins are problematic. GPT suggested something like SQL subqueries, where we can put a joining branch as an indentation.

    pipeline(
        source("users.json"),
        transform(parse_json),
        transform(filter_active_users),
        join(
            pipeline(
                source("purchases.csv"),
                transform(parse_csv),
            ),
            on="user_id"
        ),
        transform(calculate_user_scores),
        destination("scores.json")
    )

Not the actual API, just a sketch.

So we can write a joining pipeline as an indentation of the join. The above represents the following pipeline.

    purchases.csv                   users.json
          │                              │
      parse_csv                     parse_json
          │                              │
          │                     filter_active_users
          │                              │
          └───────────────┬──────────────┘
                          │
                  join(on=user_id)
                          │
                calculate_user_scores
                          │
                     scores.json
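As a rough check that a nested description really can encode this DAG, here is a sketch that flattens one into edges. Every constructor below is hypothetical and only mirrors the shape of the sketch above. Steps are named by strings rather than function references to keep the example self-contained, and node names are assumed to be unique.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Step:
    name: str

@dataclass(frozen=True)
class Join:
    branch: "Pipeline"
    on: str

@dataclass(frozen=True)
class Pipeline:
    steps: tuple

def pipeline(*steps):
    return Pipeline(steps)

def source(path):
    return Step(path)

def transform(fn_name):
    return Step(fn_name)

def destination(path):
    return Step(path)

def join(branch, on):
    return Join(branch, on)

def to_edges(p, edges=None):
    """Flatten the nested description into DAG edges; returns (edges, last_node)."""
    edges = [] if edges is None else edges
    prev = None
    for step in p.steps:
        if isinstance(step, Join):
            name = f"join(on={step.on})"
            # The indented branch is walked first, then wired into the join node.
            _, branch_tail = to_edges(step.branch, edges)
            edges.append((branch_tail, name))
        else:
            name = step.name
        if prev is not None:
            edges.append((prev, name))
        prev = name
    return edges, prev

dag, tail = to_edges(pipeline(
    source("users.json"),
    transform("parse_json"),
    transform("filter_active_users"),
    join(pipeline(source("purchases.csv"), transform("parse_csv")), on="user_id"),
    transform("calculate_user_scores"),
    destination("scores.json"),
))
```

The join node ends up with two incoming edges, one from the main backbone and one from the indented branch, which is exactly the part a plain tree could not express.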

An alternative is to do something like Hoon, where there's a backbone of glyphs that specify how each operation composes, so the focus can stay on the operations and the transforms.

Finally, I'd need to find out whether I can keep fine-grained reactivity with such a representation. In React, the unit of granularity is the function component, which may not be fine enough. I'm not sure how to tackle this yet.

LLM-simulated customer development

In a different direction, I ran an experiment in customer development using an LLM-simulated persona. I first used the LLM to help me write the prompt. Based on The Mom Test, I constrained how the persona would respond, and emphasized that it should object when a problem I was asking about really didn't line up with its simulated experiences.

Then I fired it up with the problem I'm solving with reactive notebooks, and various personas. I tried it both with people that I thought would be good fits (AI engineers building RAG pipelines) and people that would be terrible fits (coffee baristas).

It wasn't what I expected. The reply from the persona was far too transparent. It fit the problem I laid out like a glove. The only way I could tell that it wasn't just catering to me was the concrete details it filled in, which made the testimony consistent with the problem. I suspected the LLM was catering to me, which is why I tried a coffee barista. The coffee barista persona didn't relate to the problem I laid out at all. So I guess something is working.

Hence, there isn't really much of an interview. The persona's reply is so clear-minded and fits the problem so well that it almost seems like the easiest customer development interview ever. Real customer development interviews are like taking a stab in the dark, where the interviewee doesn't even know how to articulate their problem, and can only talk in terms of their current experience.

I don't trust the responses right now, but I think this can be a very helpful tool to zero in on the zeitgeist of sentiment within a customer segment, given an articulated problem. What it currently doesn't tell me is whether this is the biggest problem. Oftentimes customers will tell you that this is a problem (great!), but they won't tell you it's not the biggest problem on their mind, and hence they won't actually move to purchase even if you slap them in the face with the solution.

Chunks of data

Resisting the urge to tackle wrapped type computing, and instead implementing sample apps and pipelines, was the right call. I went back to implementing a toy RAG app to answer questions about Zig. Some things jumped out as a result:

Streaming could use multiple resumption

Before, I thought websockets would be the only reason for multiple resumption. But in many AI applications, the LLM can't generate the response fast enough, so they stream the response. Receiving streamed responses in a pipeline would be a great reason to support multiple resumption. It greatly simplifies the API, and the mechanism for handling the partial data can be cordoned off in the effect handler for customization and composition.
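Here is a minimal sketch of what that could look like, with the continuation modeled as a plain callback. `fake_llm_stream`, `stream_handler`, and `rest_of_pipeline` are all invented for illustration; no real LLM client is involved.

```python
from typing import Callable, Iterable, List

def fake_llm_stream(prompt: str) -> Iterable[str]:
    """Stand-in for a streaming LLM API; yields the reply in pieces."""
    yield from ["Zig ", "is ", "a ", "language."]

def stream_handler(prompt: str, resume: Callable[[str], None]) -> None:
    """Hypothetical effect handler: resumes the rest of the pipeline once per chunk.

    Accumulating the partial data lives here, not in the pipeline steps.
    """
    partial = ""
    for chunk in fake_llm_stream(prompt):
        partial += chunk
        resume(partial)  # the same continuation is resumed multiple times

rendered: List[str] = []

def rest_of_pipeline(text_so_far: str) -> None:
    # The downstream step sees each partial result as if it were a normal value.
    rendered.append(text_so_far.upper())

stream_handler("What is Zig?", rest_of_pipeline)
```

Swapping in a handler that only resumes once, with the full text, would leave `rest_of_pipeline` untouched, which is the kind of customization the handler boundary is meant to allow.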

Pipelines operate on collections

In the tests for my implementation of a reactive system, I often thought of state much like reactive frameworks for UI do: as disparate, primitive types. However, in pipelines, we're often working with collections, especially those that don't fit into memory. This changes the affordance and efficacy of a reactive system as typically designed.

When a single field or item in a collection changes, it shouldn't trigger a reactive node to recompute results for all elements in the collection. That's what currently happens when we rely on the pure function's properties of determinism and referential transparency to control the incrementalism that powers reactivity. To fix this, the mechanism for incrementalism must be more fine-grained to account for individual element changes.

One way to do this is to make reactive nodes operate on a single element in a collection. This is probably the simplest, though I'm not clear on what an API would look like.
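One guess at the shape of such an API: a node that wraps a per-element function and caches results by key. `PerElementNode` is a hypothetical name, and the collection is assumed to be a dict that fits in memory, which sidesteps the harder out-of-core case.

```python
from typing import Any, Callable, Dict, Hashable, Tuple

class PerElementNode:
    """Hypothetical reactive node that recomputes only elements whose input changed."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn
        self._cache: Dict[Hashable, Tuple[Any, Any]] = {}  # key -> (input, output)
        self.recomputed = 0  # counts calls to fn, for illustration

    def update(self, collection: Dict[Hashable, Any]) -> Dict[Hashable, Any]:
        out = {}
        for key, value in collection.items():
            cached = self._cache.get(key)
            if cached is None or cached[0] != value:
                self.recomputed += 1
                cached = (value, self.fn(value))
                self._cache[key] = cached
            out[key] = cached[1]
        # Forget elements that were removed from the collection.
        for key in list(self._cache):
            if key not in collection:
                del self._cache[key]
        return out

node = PerElementNode(len)
docs = {"a": "fn main", "b": "const x"}
first = node.update(docs)          # computes both elements
docs["b"] = "const y = 1"
second = node.update(docs)         # recomputes only "b"
```

Note that this still scans the whole collection to compare inputs; it only saves the calls to `fn`, so it helps when the per-element work is the expensive part.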

The other way is to use differentiable data structures so you know how and what data has changed. This is currently most attractive, but I'm not sure about the overhead and limitations.
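Reading this as a collection that can report what changed (an assumption about the intent), a minimal sketch might keep a change log next to the data and let downstream nodes consume only the deltas. `TrackedDict` and `apply_changes` are hypothetical names.

```python
from typing import Any, Callable, Dict, Hashable, List, Tuple

Change = Tuple[str, Hashable, Any]  # ("set" | "delete", key, new value or None)

class TrackedDict:
    """Hypothetical collection that records a change log alongside its data."""

    def __init__(self):
        self.data: Dict[Hashable, Any] = {}
        self._changes: List[Change] = []

    def set(self, key, value):
        if key in self.data and self.data[key] == value:
            return  # no-op writes produce no change
        self.data[key] = value
        self._changes.append(("set", key, value))

    def delete(self, key):
        if key in self.data:
            del self.data[key]
            self._changes.append(("delete", key, None))

    def drain_changes(self) -> List[Change]:
        changes, self._changes = self._changes, []
        return changes

def apply_changes(derived: dict, changes: List[Change], fn: Callable[[Any], Any]) -> dict:
    """Update a derived mapping by touching only the keys named in the change log."""
    for op, key, value in changes:
        if op == "set":
            derived[key] = fn(value)
        else:
            derived.pop(key, None)
    return derived

docs = TrackedDict()
docs.set("a", "fn main")
docs.set("b", "const x")
lengths = apply_changes({}, docs.drain_changes(), len)

docs.set("b", "const y = 1")
docs.set("a", "fn main")  # unchanged, so it is not logged
delta = docs.drain_changes()
lengths = apply_changes(lengths, delta, len)
```

Unlike the per-element cache, the downstream work here is proportional to the size of the delta rather than the size of the collection. The overhead moves into the bookkeeping on every write.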

Fetching paginated queries

As a result of recognizing that most of the state would be collections, it's currently awkward to have an effect that fetches paginated queries. This is another argument for multiple resumption.

Without it, there has to be some controller loop or context surrounding an effect to fetch a partial result.
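The two shapes can be put side by side in a small sketch. `FAKE_PAGES` and `fetch_page` stand in for a cursor-based API and are invented for this example, as are both function names.

```python
from typing import Callable, Dict, List, Optional

# Stand-in for a remote API: each page carries items and the cursor of the next page.
FAKE_PAGES: Dict[Optional[str], dict] = {
    None: {"items": [1, 2], "next": "p2"},
    "p2": {"items": [3, 4], "next": "p3"},
    "p3": {"items": [5], "next": None},
}

def fetch_page(cursor: Optional[str]) -> dict:
    return FAKE_PAGES[cursor]

def fetch_all_with_loop() -> List[int]:
    """Without multiple resumption: a controller loop wraps the single-shot effect."""
    items: List[int] = []
    cursor: Optional[str] = None
    while True:
        page = fetch_page(cursor)
        items.extend(page["items"])
        cursor = page["next"]
        if cursor is None:
            return items

def paginated_handler(resume: Callable[[List[int]], None]) -> None:
    """With multiple resumption: the handler owns the loop and resumes once per page."""
    cursor: Optional[str] = None
    while True:
        page = fetch_page(cursor)
        resume(page["items"])
        cursor = page["next"]
        if cursor is None:
            break

seen: List[int] = []
paginated_handler(lambda items: seen.extend(items))
```

In the second version the pipeline step never sees a cursor; it just gets resumed with each partial result, and the paging logic stays inside the handler.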

I forgot to include the links for last week, so you can check them out on the web page for last week's notes. This week, I found the following stuff interesting:

- E-graphs Good is an explainer on e-graphs. It's a way of expressing rewrite rules in a programming language, so that you can exhaustively explore many equivalent programs by representing the program with equivalence classes of expressions.

- How to Write Blog Posts that Developers Read. I admit it's hard for me to get to the point when writing the intro for any blog post. It's a good reminder.

- I did Advent of Code on a PlayStation. What could fully immersive visual coding look like? Using the Dreams environment on PlayStation gives a hint.

- Interaction Nets - A way to do rewrite rules for execution of programs. They're slow, but one advantage is that if you can write it sequentially, they're easy to parallelize.

- A great explainer on why NAND gates are a universal gate. How come you can use them to construct any logical circuit?

- Found-Money Startups - A nice perspective on what makes money. Sometimes, if you say the obvious thing out loud, it reframes your thinking a bit. I'm surprised that Google and advertising in general weren't an example. Advertising is often used in search of new customer segments to grow your business.

- People can read their manager's mind - though I have no employees, I read this as a way to not fool yourself when you do.